Every time you, your family or friends upload a photo or video to a social media platform, facial recognition algorithms learn more about who you are, who you know and where you are.

To address people’s privacy concerns, researchers at the University of Toronto (U of T) have developed an algorithm that can disrupt facial recognition systems and prevent them from learning more about you.

The full study explaining the algorithm is available online.

“We are able to fool a class of state-of-the-art face detection algorithms by adversarially attacking them,” said Avishek Bose, a graduate student in the Department of Electrical and Computer Engineering at U of T and co-author of the study.

“Personal privacy is a real issue as facial recognition becomes better and better,” Parham Aarabi, associate professor in the Department of Electrical and Computer Engineering at U of T and co-author of the study, said in a statement. “This is one way in which beneficial anti-facial-recognition systems can combat that ability.”

The technique

The team’s solution pits two artificial intelligence algorithms, a face detector and an adversarial generator, against each other, Bose explained.

The face detector tries to identify faces, while the adversarial generator’s sole job is to disrupt the face detector.

“The face detector has no knowledge about the existence of the adversarial generator, while the generator learns over time how to successfully fool the detector,” said Bose. “Initially the generator is very bad at doing so, but it learns through observing its mistakes, as determined by the output of the face detector.”

The adversarial generator eventually learns to fool the face detector nearly 99 percent of the time.
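To make the detector-versus-generator setup concrete, here is a toy sketch in Python. Everything in it is an illustrative assumption, not the researchers’ code: `ToyDetector` is a hypothetical linear “face detector” over a flattened image, and `ToyGenerator` is reduced to a single learnable perturbation kept within `eps` (the system described above uses neural networks for both). As in Bose’s description, the detector is never updated; the generator adjusts itself based on the images the detector still flags (here, via the gradient of the detector’s score, which assumes access to the detector’s internals).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class ToyDetector:
    """Hypothetical fixed face detector: logistic score over pixel values."""

    def __init__(self, weights, bias):
        self.w = weights
        self.b = bias

    def logit(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def face_probability(self, x):
        return sigmoid(self.logit(x))


class ToyGenerator:
    """Hypothetical generator: one learnable perturbation kept in [-eps, eps]."""

    def __init__(self, n_pixels, eps):
        self.theta = [0.0] * n_pixels
        self.eps = eps

    def perturbation(self):
        # tanh squashes each parameter so no pixel moves by more than eps.
        return [self.eps * math.tanh(t) for t in self.theta]


def fooling_rate(detector, images, delta):
    """Fraction of images on which the detector no longer reports a face."""
    fooled = sum(
        detector.face_probability([xi + di for xi, di in zip(x, delta)]) < 0.5
        for x in images
    )
    return fooled / len(images)


def train_generator(detector, generator, images, steps=200, lr=0.5, margin=1.0):
    """Minimize mean(max(0, score + margin)); the detector is never updated."""
    for _ in range(steps):
        delta = generator.perturbation()
        grads = [0.0] * len(generator.theta)
        for x in images:
            x_adv = [xi + di for xi, di in zip(x, delta)]
            # Only the generator's "mistakes" (images the detector still
            # scores above -margin) contribute to the update.
            if detector.logit(x_adv) > -margin:
                for i, t in enumerate(generator.theta):
                    # d(score)/d(delta_i) = w_i; d(delta_i)/d(theta_i) = eps * (1 - tanh^2).
                    grads[i] += detector.w[i] * generator.eps * (1.0 - math.tanh(t) ** 2)
        for i in range(len(generator.theta)):
            generator.theta[i] -= lr * grads[i] / len(images)
    return generator
```

For example, with a hypothetical detector `ToyDetector([2.0, -1.0, 1.5, 0.0], -1.0)` and three small images it initially flags, training `ToyGenerator(4, 0.5)` drives `fooling_rate` from 0.0 to 1.0. The pixel with zero weight is never perturbed, because the detector does not “look at” it. A real generator network would produce a different perturbation for each image rather than one shared vector.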

Essentially, the researchers created an Instagram-like filter that can be applied to photos to protect personal privacy.

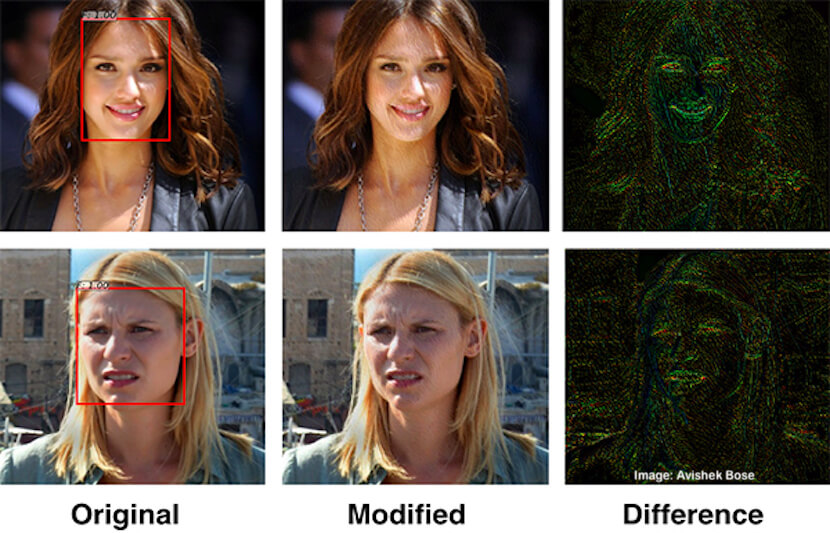

The new algorithm makes slight changes to the pixels in an image that are imperceptible to humans, but capable of fooling a state-of-the-art face detector.

“The disruptive AI can ‘attack’ what the neural net for the face detection is looking for,” Bose said in a statement. “If the detection AI is looking for the corner of the eyes, for example, it adjusts the corner of the eyes so they’re less noticeable. It creates very subtle disturbances in the photo, but to the detector they’re significant enough to fool the system.”
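The idea of attacking “what the detector is looking for” can be illustrated with the fast gradient sign method (FGSM), a standard single-step adversarial attack. It is swapped in here for illustration only; the U of T system trains a generator instead. The sketch assumes a hypothetical linear face score `w·x + b` over pixel values in [0, 1], and `gradient_sign_perturb` is an illustrative name, not part of any library.

```python
def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def gradient_sign_perturb(image, weights, eps):
    """One gradient-sign step against a hypothetical linear face score w.x + b.

    The gradient of the score with respect to pixel i is weights[i], so
    stepping against its sign lowers the score as fast as possible under a
    per-pixel budget of eps. Pixels with zero weight (features this toy
    detector ignores) are left untouched.
    """
    out = []
    for x, w in zip(image, weights):
        x_new = x - eps * sign(w)
        out.append(min(1.0, max(0.0, x_new)))  # keep a valid pixel value
    return out
```

With `weights = [0.0, 3.0, -2.0, 0.0]` and a flat gray image `[0.5, 0.5, 0.5, 0.5]`, a budget of `eps = 0.02` changes only the two pixels the score depends on, each by 0.02, which is the toy analogue of nudging only the “corner of the eyes.”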

The researchers tested their system on a pool of 600 faces, including many different ethnicities, environments and lighting conditions.

The system reduced the proportion of faces the detectors could find from nearly 100 percent to just 0.5 percent.
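As a quick sanity check on the scale of that figure: on a 600-face pool, 0.5 percent corresponds to 3 faces. The sketch below computes a detection rate from per-face results; the flags are made up to illustrate the arithmetic and are not the study’s data, and `detection_rate` is a hypothetical helper.

```python
def detection_rate(detected):
    """Percentage of faces in a test pool that a detector reported."""
    return 100.0 * sum(detected) / len(detected)


# Hypothetical per-face results for a 600-face pool (True = face found).
# These flags illustrate the arithmetic only; they are not the study's data.
before = [True] * 600
after = [True] * 3 + [False] * 597

print(detection_rate(before))  # 100.0
print(detection_rate(after))   # 0.5
```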

Additionally, the technology can disrupt image-based search, feature identification, and the automatic estimation of ethnicity, emotion or any other face-based attribute.

Motivation

This study was sparked by Aarabi and Bose’s interest in researching the failure modes of modern deep-learning-based face detection and recognition algorithms.

“We initially started by testing these algorithms under extreme conditions such as blur, brightness, and dimness,” said Bose.

From their experiments, the researchers noticed that even pictures taken in decent conditions occasionally caused the algorithms to fail, an observation that tied in with recent literature on adversarial attacks, Bose explained.

“Essentially an adversarial attack causes small and often imperceptible changes to the input image, which causes the machine learning models to fail catastrophically,” said Bose.

“This is a known phenomenon in many deep learning models, but it had never been investigated in the context of face detection/recognition systems.”
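Why can imperceptible changes cause catastrophic failure? One standard intuition from the adversarial-examples literature (the linearity argument of Goodfellow et al.) can be worked out for a linear score, used here as a simplifying assumption since real face detectors are deep networks. Let the image be \(\mathbf{x} \in \mathbb{R}^n\) and shift every pixel by at most \(\epsilon\) against the sign of its weight:

```latex
s(\mathbf{x}) = \mathbf{w}^\top \mathbf{x} + b, \qquad
\boldsymbol{\eta} = -\epsilon \, \operatorname{sign}(\mathbf{w}), \qquad
\|\boldsymbol{\eta}\|_\infty \le \epsilon

s(\mathbf{x} + \boldsymbol{\eta}) - s(\mathbf{x})
  = -\epsilon \, \mathbf{w}^\top \operatorname{sign}(\mathbf{w})
  = -\epsilon \sum_{i=1}^{n} |w_i|
  = -\epsilon \, n \, \bar{m},
\qquad \bar{m} = \frac{1}{n} \sum_{i=1}^{n} |w_i|
```

Each pixel moves by no more than \(\epsilon\), yet the change in score grows linearly with the number of pixels \(n\). In a large image, many individually invisible changes can therefore add up to a large swing in the detector’s output. This is offered as intuition only, not as the analysis in the U of T study.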

What’s next?

Aarabi and Bose wish to extend this style of attack to other classes of detectors. While this might be difficult or impossible in some cases, the primary goal is to understand and characterize the concept.

Once the researchers succeed against multiple classes of detectors, a tool could be built, but they are still far from that point, said Bose.

“In the future you can imagine an app that applies this privacy filter to your photos before you upload them to the internet,” he explained.

For now, though, much research remains to be done.

“Our paper is just the starting point rather than anything close to the end goal,” said Bose.

Bose noted that while the researchers were able to fool a state-of-the-art face detection algorithm, they do not yet claim to be able to attack every face detection system.

“This is a small step to a larger goal where we empower the user to protect their own privacy if they so choose,” said Bose.