A team of three roboticists from the Queensland University of Technology (QUT) has developed a faster and more accurate way for robots to grasp objects in real time, opening doors for applications in both industrial and domestic settings.

Their paper was presented last week at Robotics: Science and Systems, an international robotics conference held at Carnegie Mellon University.

Taking baby steps

No one can deny that robotics is the way of the future. According to the International Data Corporation, worldwide spending on robotics will reach $135 billion by 2019.

While robots have long been replacing human labor on assembly lines and in computation, and have even started to see, hear and think like humans in chess and debates, they still can’t quite grasp one thing: picking up an object in an unstructured environment.

In humans, the process goes largely unnoticed. Thousands of sensors in the fingers and a complex network of circuitry in the brain allow a three-month-old to pick up his toy rattle, and he obviously does not need hours of training or hundreds of researchers to teach him.

But for robots, grasping objects in unstructured environments is extremely difficult. Yet, to be useful around humans, robots need to know how to adapt to changing environments.

The QUT team has developed a new method that lets robots grasp randomly moving items more quickly and accurately.

“We are interested in creating robots that do useful tasks in the real world. I have been interested for a while in getting robots to robustly interact with the world, pick objects up and place them correctly,” said Jürgen Leitner, a postdoctoral research fellow at QUT’s Science and Engineering Faculty. “It turns out some things that are hard for us are simple for computers, such as chess, while some things that come easy for us are very tricky for robots, such as grasping.”

The new approach

Deep-learning techniques allow robots to learn from objects of thousands of sizes and shapes and then calculate the best way to grasp an unknown, stationary item.

For example, a robot using a convolutional neural network (CNN), the most widely used method for object recognition, samples and ranks candidate grasps individually before settling on the best possible grip. However, this technique requires long computation times, even in static environments.

The researchers’ method, by contrast, is based on a generative grasping convolutional neural network (GG-CNN), which takes a depth image of the scene and predicts a grasp for every pixel in a single pass, making it fast enough to grasp moving objects.

“The Generative Grasping Convolutional Neural Network approach works by predicting the quality and pose of a two-fingered grasp at every pixel. By mapping what is in front of it using a depth image in a single pass, the robot doesn’t need to sample many different possible grasps before making a decision, avoiding long computing times,” Douglas Morrison, a QUT doctoral researcher, said in a statement.
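To make the per-pixel idea concrete, here is a minimal sketch of how a best grasp might be read out once a network has produced a quality, angle and gripper-width value for every pixel. The function name, the `Grasp` type and the nested-list map layout are assumptions made for illustration; this is not the researchers' code, and the toy maps stand in for real network outputs.

```python
from typing import List, NamedTuple

Grid = List[List[float]]  # one value per pixel, indexed as grid[row][col]


class Grasp(NamedTuple):
    row: int
    col: int
    quality: float  # predicted chance of a successful grasp at this pixel
    angle: float    # gripper rotation at this pixel (radians, assumed)
    width: float    # gripper opening at this pixel (arbitrary units, assumed)


def best_grasp(quality: Grid, angle: Grid, width: Grid) -> Grasp:
    """Return the grasp at the pixel with the highest predicted quality.

    All three maps are hypothetical stand-ins for per-pixel network outputs
    and must have the same dimensions.
    """
    best = None
    for r, quality_row in enumerate(quality):
        for c, q in enumerate(quality_row):
            if best is None or q > best.quality:
                best = Grasp(r, c, q, angle[r][c], width[r][c])
    if best is None:
        raise ValueError("quality map is empty")
    return best


# Toy 3x3 maps: the centre pixel has the highest quality.
quality_map = [[0.1, 0.2, 0.1], [0.3, 0.9, 0.4], [0.2, 0.1, 0.0]]
angle_map = [[0.0, 0.0, 0.0], [0.0, 0.5, 0.0], [0.0, 0.0, 0.0]]
width_map = [[10.0, 10.0, 10.0], [10.0, 25.0, 10.0], [10.0, 10.0, 10.0]]

grasp = best_grasp(quality_map, angle_map, width_map)
```

Because every pixel already carries a complete grasp candidate, choosing one is a single scan over the quality map rather than a separate evaluation of each sampled grasp.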

While their previous robot, which won the Amazon Robotics Challenge in 2017, simply looked into a bin of objects, calculated the best possible grip and blindly went in to pick them up, their updated version processes each image of the objects in about 20 milliseconds.

According to Leitner, after picking up an object, their current robot places it at a fixed location and starts looking for the next item in the scene.

The unpredictable world

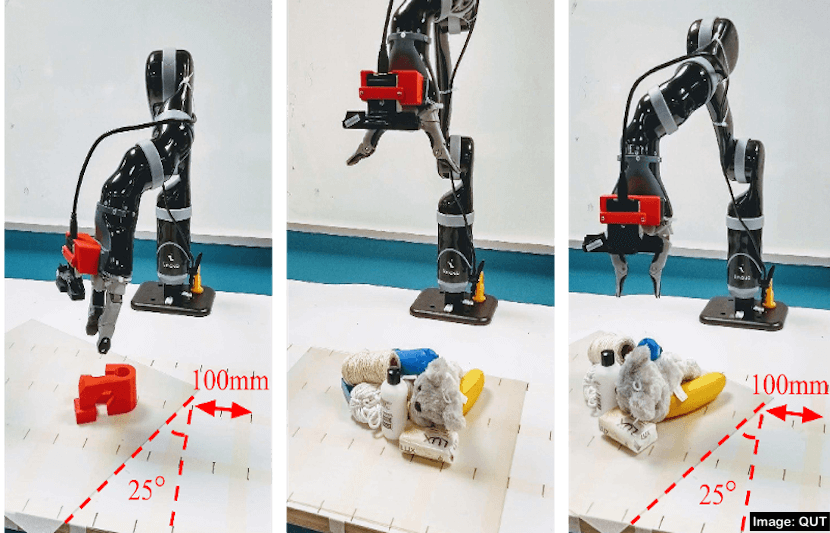

The researchers tested their new approach in multiple ways.

“There were various experimental setups, all with real world robot and objects,” said Leitner. “We tested with a range of ‘household objects,’ 3D printed objects, and we verified the approach with different noise levels in the robot controller.”

First, they performed 10 trials of grasping previously unseen objects, both objects with adversarial geometry and household objects, under static and moving conditions.

The robot achieved a grasp success rate of 84 percent for adversarial geometry and 92 percent for household objects when the objects were static, and 83 percent and 88 percent, respectively, when they were moving.

Second, they mixed 10 test objects in a box and poured them into a cluttered pile below the robot for it to grasp one by one. They then performed 10 trials of grasping under both static and moving conditions.

The robot achieved a grasp success rate of 87 percent when the pile was static and 81 percent when it was moving.

“Using this new method, we can process images of the objects that a robot views within about 20 milliseconds, which allows the robot to update its decision on where to grasp an object and then do so with much greater purpose. This is particularly important in cluttered spaces,” Leitner said in a statement.
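The value of a fast update can be sketched with a toy loop in which the gripper re-aims at the newest target estimate on every cycle, each cycle standing in for one image processed in roughly 20 milliseconds. The function name, the proportional `gain`, the `tolerance` and the straight-line target motion are all hypothetical choices for illustration, not details of the researchers' controller.

```python
import math
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float]


def track_and_reach(
    target_estimates: Iterable[Point],
    start: Point,
    gain: float = 0.5,       # fraction of the remaining gap closed per cycle (assumed)
    tolerance: float = 1.5,  # distance at which a grasp is attempted (assumed)
) -> Optional[Tuple[int, Point]]:
    """Step toward the latest target estimate once per cycle.

    Returns (cycle index, gripper position) for the first cycle in which the
    gripper is within `tolerance` of the target, or None if that never happens.
    """
    x, y = start
    for cycle, (tx, ty) in enumerate(target_estimates):
        # Re-aim at the newest estimate instead of a stale one.
        x += gain * (tx - x)
        y += gain * (ty - y)
        if math.hypot(tx - x, ty - y) <= tolerance:
            return cycle, (x, y)
    return None


# Toy target drifting one unit per cycle along x.
estimates = [(10.0 + i, 5.0) for i in range(20)]
result = track_and_reach(estimates, start=(0.0, 0.0))
```

In this toy setup the gripper closes in on the drifting target within a handful of cycles. A robot that computed its grasp once and then moved blindly would instead arrive at a position the object had already left.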

The next step

The researchers hope to further improve their method so that robots can be used more safely and widely around humans in industrial and domestic settings.

“This line of research enables us to use robotic systems not just in structured settings where the whole factory is built based on robotic capabilities,” Leitner said in a statement. “This has benefits for industry – from warehouses for online shopping and sorting, through to fruit picking. It could also be applied in the home, as more intelligent robots are developed to not just vacuum or mop a floor, but also to pick items up and put them away.”

According to Leitner, they are also working to overcome some of the limitations of the current approach, including in robotic vision and manipulation skills across a variety of settings.

They are currently planning on organizing a “Robotic Tidy Up My Room Challenge.”