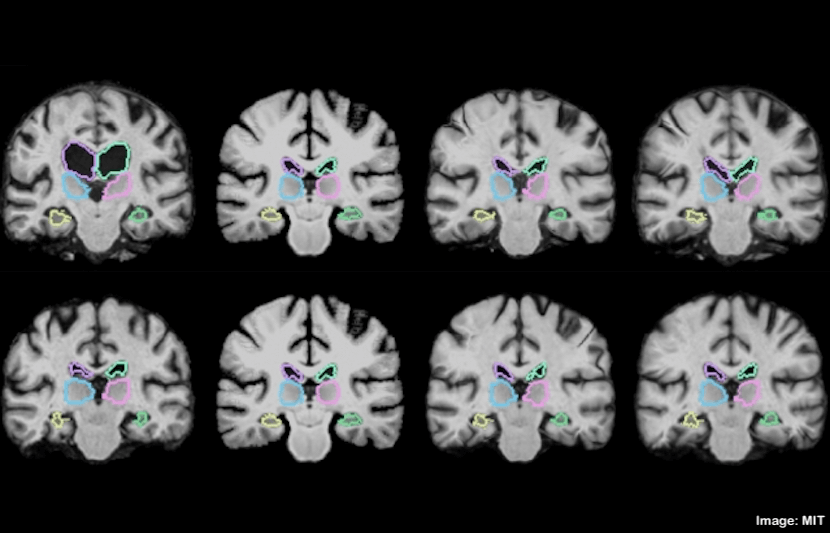

MIT researchers have built a machine-learning algorithm that registers, or aligns, MRI scans and other 3D images for comparison and analysis in a matter of seconds.

This cuts the traditional runtime of two hours or more to about a second.

Medical imaging, including MRI and CT scans, is not only a medical breakthrough that lets doctors thoroughly compare and analyze anatomical differences; it is also a giant business, with nearly $50 billion spent on almost 40 million scans per year in the U.S. alone.

However, registering these scans with traditional methods can still take two hours or more, slowing clinical research and limiting other potential applications.

“Traditional algorithms for medical image registration are prohibitively slow, making it unlikely that they will be used in many clinical settings,” said co-authors Adrian Dalca, a postdoctoral fellow at Massachusetts General Hospital and MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), and Guha Balakrishnan, a graduate student in CSAIL and the Department of Electrical Engineering and Computer Science (EECS).

The two papers describing their machine-learning algorithm were presented at the 2018 Conference on Computer Vision and Pattern Recognition (CVPR) last week and will be presented at the 21st International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) in Spain, Sept. 16-20.

Why does it take so long?

In MRI scans, hundreds of stacked 2D images form massive 3D images, called “volumes,” that contain a million or more 3D pixels, called “voxels.”

For example, when scanning the brain, the technique produces many 2D “slices” that are combined to form a 3D representation of the brain.

Because each volume holds so many voxels, meticulously aligning every voxel in the first scan with its counterpart in the second takes a long time. The process is even harder when the scans come from different machines, and it becomes particularly slow when analyzing scans from large populations.
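
To put the scale in concrete terms, the short Python/NumPy sketch below stacks 2D slices into a 3D volume and counts its voxels. The geometry (200 slices of 256 × 256 pixels) is a hypothetical example chosen for illustration, not a figure from the study, and the intensities are random stand-ins.

```python
import numpy as np

# Hypothetical scan geometry: 200 slices, each 256 x 256 pixels.
# Real MRI protocols vary; these numbers are for illustration only.
NUM_SLICES, HEIGHT, WIDTH = 200, 256, 256

# Each 2D slice is a grid of pixel intensities (random stand-ins here).
rng = np.random.default_rng(0)
slices = [rng.random((HEIGHT, WIDTH), dtype=np.float32) for _ in range(NUM_SLICES)]

# Stacking the slices along a new first axis yields the 3D "volume";
# every element of the resulting array is one voxel.
volume = np.stack(slices, axis=0)

print(volume.shape)  # (200, 256, 256)
print(volume.size)   # 13107200
```

Even this modest hypothetical geometry gives about 13 million voxels, well above the “million or more” mentioned above.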

In cases where doctors need to learn about variations in brain structures across hundreds of patients with a particular disease or condition, registering the scans alone could take hundreds of hours.

“You have two different images of two different brains, put them on top of each other, and you start wiggling one until one fits the other. Mathematically, this optimization procedure takes a long time,” Dalca said in a statement.
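
Dalca’s “wiggling” description corresponds to an optimization loop. As a deliberately simplified illustration (a toy of our own, not the researchers’ baseline), the Python sketch below tries every integer shift of one 2D image and keeps the one with the lowest mean squared difference from the other. Real deformable registration methods optimize far more parameters, often a displacement for every voxel, using iterative solvers, which is where the hours go.

```python
import numpy as np

def mse(a, b):
    """Mean squared intensity difference: lower means a better fit."""
    return float(np.mean((a - b) ** 2))

def register_by_search(moving, fixed, max_shift=5):
    """Toy registration: try every integer (dy, dx) shift of `moving`
    and keep the one that best matches `fixed`. Every candidate means
    re-comparing every pixel, which is why optimization-based methods
    become slow on volumes with millions of voxels."""
    best_shift, best_cost = (0, 0), mse(moving, fixed)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # np.roll wraps around at the edges; acceptable for this toy.
            candidate = np.roll(moving, shift=(dy, dx), axis=(0, 1))
            cost = mse(candidate, fixed)
            if cost < best_cost:
                best_shift, best_cost = (dy, dx), cost
    return best_shift, best_cost

# Usage: a bright square, and the same square displaced by (3, -2).
fixed = np.zeros((32, 32))
fixed[10:20, 10:20] = 1.0
moving = np.roll(fixed, shift=(3, -2), axis=(0, 1))

shift, cost = register_by_search(moving, fixed)
print(shift, cost)  # (-3, 2) 0.0
```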

The researchers wondered what would happen if, instead of starting from scratch with each new pair of images, the algorithm learned from previous registrations.

“After 100 registrations, you should have learned something from the alignment. That’s what we leverage,” Balakrishnan said in a statement.

An algorithm that learns

The new machine-learning algorithm, called VoxelMorph, is powered by a convolutional neural network (CNN), a machine-learning approach commonly used for image processing.

These networks consist of many nodes that process images and other information across several layers of computation.

The researchers trained VoxelMorph on 7,000 publicly available MRI brain scans.

During training, VoxelMorph registered thousands of pairs of brain scans. Its CNN component and a spatial transformer, a modified computational layer that warps one scan according to the predicted alignment, learned how to align images by capturing similar groups of voxels in each pair of MRI scans, such as anatomical shapes common to both scans.

Then, when fed two new scans, VoxelMorph used the parameters it learned during training to quickly calculate the alignment of every voxel in the new scans.

In short, the CNN acquires what it needs during training so that each new registration can be executed with a single, easily computed function evaluation.
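
The spatial transformer mentioned above is the piece that applies the alignment: in the original VoxelMorph formulation, the CNN predicts a displacement for every voxel, and the spatial transformer resamples the moving scan at the displaced locations. The NumPy function below is a minimal 2D analogue under our own assumptions (a displacement field stored as row and column offsets per pixel, bilinear interpolation, clamping at the borders); the real layer works on 3D volumes inside a deep-learning framework so that gradients can flow through it during training.

```python
import numpy as np

def warp_bilinear(image, disp):
    """Warp a 2D image with a dense displacement field.

    disp has shape (2, H, W): disp[0] is the row offset and disp[1] the
    column offset for each output pixel. Output pixel (r, c) samples the
    input at (r + disp[0, r, c], c + disp[1, r, c]) with bilinear
    interpolation; sample positions are clamped to the image border.
    """
    h, w = image.shape
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    r = np.clip(rows + disp[0], 0, h - 1)
    c = np.clip(cols + disp[1], 0, w - 1)

    # Integer corners surrounding each sample position.
    r0 = np.floor(r).astype(int)
    c0 = np.floor(c).astype(int)
    r1 = np.minimum(r0 + 1, h - 1)
    c1 = np.minimum(c0 + 1, w - 1)
    fr = r - r0  # fractional parts act as interpolation weights
    fc = c - c0

    top = image[r0, c0] * (1 - fc) + image[r0, c1] * fc
    bottom = image[r1, c0] * (1 - fc) + image[r1, c1] * fc
    return top * (1 - fr) + bottom * fr

# Usage: zero displacement leaves the image unchanged.
img = np.arange(16, dtype=float).reshape(4, 4)
print(np.allclose(warp_bilinear(img, np.zeros((2, 4, 4))), img))  # True

# Sampling half a pixel to the right blends neighbouring columns.
half = np.zeros((2, 4, 4))
half[1] = 0.5
print(warp_bilinear(img, half)[0].tolist())  # [0.5, 1.5, 2.5, 3.0]
```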

“The tasks of aligning a brain MRI shouldn’t be that different when you’re aligning one pair of brain MRIs or another,” Balakrishnan said in a statement.

“There is information you should be able to carry over in how you do the alignment. If you’re able to learn something from previous image registration, you can do a new task much faster and with the same accuracy.”

Unlike some other CNN-based algorithms, which require a traditional registration algorithm to be run first to produce reference registrations for training, VoxelMorph is “unsupervised”: it needs only the image data to learn accurate registration.
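
Being “unsupervised” means the training signal comes from the images themselves. A common way to express this, similar in spirit to the loss described in the VoxelMorph papers, is an image-similarity term between the fixed scan and the warped moving scan plus a penalty on abrupt changes in the displacement field. The sketch below uses mean squared error and a squared-gradient penalty; the weight `lam = 0.01` and the function names are hypothetical choices for illustration.

```python
import numpy as np

def smoothness_penalty(disp):
    """Mean squared spatial gradient of a (2, H, W) displacement field.
    Large values mean neighbouring pixels are moved very differently."""
    penalty = 0.0
    for component in disp:  # row offsets, then column offsets
        d_row, d_col = np.gradient(component)
        penalty += np.mean(d_row ** 2) + np.mean(d_col ** 2)
    return float(penalty)

def unsupervised_loss(fixed, warped_moving, disp, lam=0.01):
    """Image similarity (MSE) plus lam times the smoothness penalty.
    Needs only the images and the predicted displacement field,
    not a reference registration from another algorithm."""
    similarity = float(np.mean((fixed - warped_moving) ** 2))
    return similarity + lam * smoothness_penalty(disp)

# Usage: a perfectly aligned pair with zero displacement costs nothing.
fixed = np.zeros((8, 8))
fixed[2:6, 2:6] = 1.0
zero_disp = np.zeros((2, 8, 8))
print(unsupervised_loss(fixed, fixed, zero_disp))  # 0.0
```

During training, the network's weights would be adjusted to lower this value across many image pairs; the smoothness term discourages the network from "cheating" with erratic displacements that happen to match intensities.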

The result

Tested on 250 additional scans, VoxelMorph accurately registered all of them within two minutes using a traditional central processing unit (CPU), and in under one second using a graphics processing unit (GPU).

“In our initial tests and publications, we processed a large number of research images, to help gain insights into disease. In this scenario, the shortened VoxelMorph runtime can dramatically impact analysis,” said Dalca and Balakrishnan.

The researchers then refined VoxelMorph so that it guarantees the “smoothness” of each registration, meaning it does not produce folds, holes, or general distortions in the composite image.
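
One standard way to check the “no folds” property is the Jacobian determinant of the deformation: wherever it is zero or negative, the mapping collapses or flips space locally, which shows up as a fold. The sketch below computes it for a 2D displacement field with `np.gradient`. It is a diagnostic only; the source does not say how the refined VoxelMorph enforces smoothness, and nothing here should be read as that mechanism.

```python
import numpy as np

def jacobian_determinant(disp):
    """Per-pixel Jacobian determinant of the 2D mapping x -> x + u(x),
    where disp = u has shape (2, H, W) (row offset, column offset)."""
    du_r_dr, du_r_dc = np.gradient(disp[0])
    du_c_dr, du_c_dc = np.gradient(disp[1])
    # The Jacobian of x + u is the identity plus the gradient of u.
    return (1 + du_r_dr) * (1 + du_c_dc) - du_r_dc * du_c_dr

def count_folds(disp):
    """Number of pixels where the mapping folds (determinant <= 0)."""
    return int(np.sum(jacobian_determinant(disp) <= 0))

# Usage: the identity mapping has no folds.
smooth = np.zeros((2, 16, 16))
print(count_folds(smooth))  # 0

# Pulling each row past its neighbours reverses orientation everywhere.
folded = np.zeros((2, 16, 16))
folded[0] = -2.0 * np.arange(16)[:, None]
print(count_folds(folded))  # 256
```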

To validate the algorithm’s accuracy, the researchers used the Dice score, a standard metric for evaluating how well overlapping images match.

They found that, across 17 brain regions, the refined VoxelMorph matched the accuracy of a commonly used state-of-the-art registration algorithm while offering runtime and methodological improvements.
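
The Dice score compares two segmentations A and B as 2|A ∩ B| / (|A| + |B|): 1 means perfect overlap and 0 means none. Scoring several brain regions, as in the 17-region comparison above, usually means computing Dice separately for each anatomical label and averaging. The sketch below assumes integer label maps; the label values, function names, and the empty-mask convention are illustrative assumptions.

```python
import numpy as np

def dice(a, b):
    """Dice = 2 * |A intersect B| / (|A| + |B|) for two boolean masks."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return float(2.0 * np.logical_and(a, b).sum() / total)

def mean_dice_per_label(seg_a, seg_b, labels):
    """Average Dice over the given anatomical labels in two label maps."""
    return float(np.mean([dice(seg_a == k, seg_b == k) for k in labels]))

# Usage: a 4 x 4 square (label 1) and the same square shifted down one row.
a = np.zeros((10, 10), dtype=int)
a[2:6, 2:6] = 1
b = np.zeros((10, 10), dtype=int)
b[3:7, 2:6] = 1
print(dice(a == 1, b == 1))  # 0.75
```

Here 12 of the 16 pixels overlap, so Dice = 2 × 12 / (16 + 16) = 0.75.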

The next step

Beyond brain scans, VoxelMorph could enable a wide range of new research and applications.

At the very least, VoxelMorph could make patient care much more efficient. Doctors could quickly align images of a particular patient taken before and after a surgery or treatment to assess the effect of the procedure.

“Whereas previous methods were prohibitively slow, the short VoxelMorph runtime promises to enable this comparison as soon as the scan is acquired,” said Dalca and Balakrishnan. “This is a direction of future work for us.”

Moreover, VoxelMorph could pave the way for image registration during operations.

Currently, when resecting a brain tumor, surgeons scan the patient’s brain before the operation and must wait until afterward to see whether they have removed the entire tumor. If the removal is incomplete, they have to go back to the operating room.

VoxelMorph, however, could potentially register scans in near real time, giving surgeons a much clearer picture of their progress.

“Today, they can’t really overlap the images during surgery, because it will take two hours, and the surgery is ongoing,” Dalca said in a statement. “However, if it only takes a second, you can imagine that it could be feasible.”

The researchers are now also running the algorithm on lung images and hope to improve it further.

“We are working on automatically evaluating the result of image alignment to help clinicians understand where pathologies might be present, registering low-quality stroke clinical scans that come from the hospital, and aligning lung images for patients with pulmonary disease,” said Dalca and Balakrishnan.